Introduction

Overview

Containers are a solution to the problem of getting software to run reliably when it is moved from one computing environment to another.

Docker burst onto the scene in 2013 and has been generating excitement in IT circles ever since.

Container technology powered by Docker promises to change the way IT works, much as virtualization did a few years earlier.

What is a Container and why should it be used?

Containers are a solution to the problem of getting software to run reliably when it is moved from one computing environment to another. The move might be from a developer’s laptop to a test environment, from a pre-production environment to production, or from a physical machine in a data center to a virtual machine in the cloud.

Problems arise when the supporting software environment is not the same, says Docker creator Solomon Hykes. “You would test in Python 2.7, and then it would run on Python 3 in production and something weird would happen. Or you will rely on the behavior of a certain version of the SSL library but another version will be installed instead. You’re going to run tests on Debian and run production in Red Hat and all sorts of weird things happen.”
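The kind of mismatch described in the quote can be made visible with a small check. The following is only a minimal sketch using the Python standard library: the names `EXPECTED_PYTHON`, `describe_environment`, and `python_matches` are hypothetical and chosen for illustration, and the expected version is an assumption, not something prescribed by Docker.

```python
import ssl
import sys

# Hypothetical expectation for illustration: the major version the code was tested on.
EXPECTED_PYTHON = (3,)


def describe_environment():
    """Return the interpreter and SSL library versions of the current environment."""
    return {
        "python": tuple(sys.version_info[:3]),
        "openssl": ssl.OPENSSL_VERSION,
    }


def python_matches(expected_prefix, actual=None):
    """Return True if the Python version starts with the expected prefix."""
    if actual is None:
        actual = tuple(sys.version_info[:3])
    return tuple(actual[: len(expected_prefix)]) == tuple(expected_prefix)


if __name__ == "__main__":
    env = describe_environment()
    print("Python:", ".".join(str(part) for part in env["python"]))
    print("SSL library:", env["openssl"])
    if not python_matches(EXPECTED_PYTHON):
        print("Warning: this environment differs from the one the code was tested on")
```

Running the same script on a laptop, a test server, and a production host shows whether the three environments actually agree. A container sidesteps the question by shipping the interpreter and libraries together with the application.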

What is the difference between containers and virtualization?

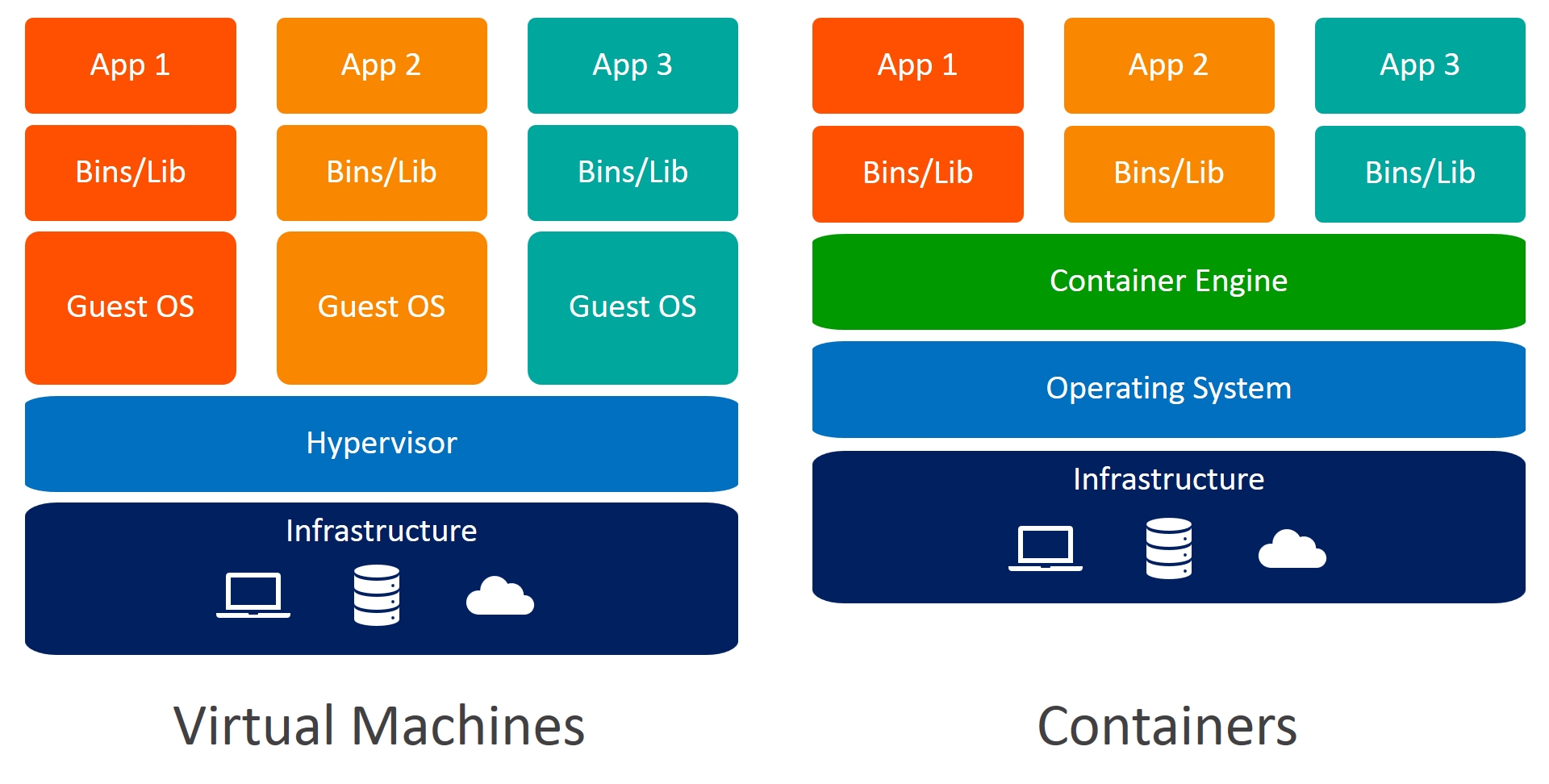

With virtualization technology, the package that gets moved around is a virtual machine, which includes an entire operating system as well as the application. A physical server running three virtual machines will have a hypervisor and three separate operating systems running on top of it.

In contrast, a server running three applications packaged with Docker runs a single operating system, and each container shares the operating system kernel with the other containers. The shared parts of the operating system are read-only, while each container has its own mount (i.e. a way to access the container) for writing. That means containers are much lighter and use far fewer resources than virtual machines.
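One way to observe the shared kernel is to compare the kernel release reported on the host with the one reported inside a container. The sketch below is illustrative only: `kernel_info` and `same_kernel` are hypothetical helper names, and the comparison assumes a standard Linux container running directly on a Linux host. With Docker Desktop on macOS or Windows, containers run inside a Linux virtual machine, so they report that machine's kernel instead.

```python
import platform


def kernel_info():
    """Return the operating system name and kernel release seen by this process."""
    return {
        "system": platform.system(),
        "kernel_release": platform.release(),
    }


def same_kernel(info_a, info_b):
    """Return True if two kernel_info() results describe the same kernel."""
    return info_a == info_b


if __name__ == "__main__":
    info = kernel_info()
    print("System:", info["system"])
    print("Kernel release:", info["kernel_release"])
```

Under the assumption above, running this script on the host and again inside a container should print the same kernel release, whereas a virtual machine reports the kernel of its own guest operating system.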